What is the Hadoop Architecture?

Introduction

In the rapidly evolving landscape of data management and analytics, one name stands out prominently: Hadoop. It’s not just a buzzword; it’s a game-changer. With the explosive growth of data in recent years, organizations have had to reevaluate their strategies for storing, processing, and analyzing vast amounts of information. Hadoop, an open-source framework, has emerged as a powerful solution to these challenges. In this blog post, we’ll delve into the intricacies of Hadoop architecture to shed light on how it revolutionizes data management.

What is Hadoop?

Hadoop is a distributed storage and processing framework designed to handle vast volumes of data efficiently. It was created by Doug Cutting and Mike Cafarella in 2005 and is now maintained by the Apache Software Foundation. At its core, Hadoop is all about scalability, fault tolerance, and flexibility.

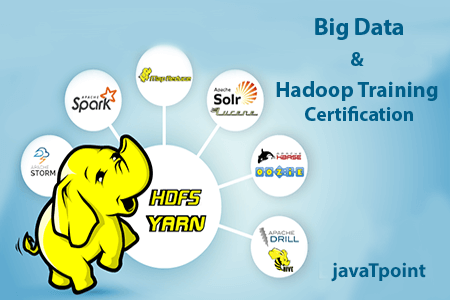

The Core Components

The Hadoop architecture comprises a variety of components, each playing a distinct role in the data processing pipeline. Let’s explore some of the fundamental ones:

Hadoop Distributed File System (HDFS): HDFS is the backbone of Hadoop. It divides large files into smaller blocks and stores multiple copies of these blocks across different nodes in a cluster. This redundancy ensures data availability even in the face of hardware failures.
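
To make the block-and-replica idea concrete, here is a minimal Python sketch. It is not HDFS code: `split_into_blocks` and `place_replicas` are hypothetical helpers, the node names are made up, and the round-robin placement is a simplification (real HDFS uses a rack-aware placement policy).

```python
from __future__ import annotations


def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut a file's bytes into fixed-size blocks; the last block may be smaller."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_replicas(num_blocks: int, nodes: list[str], replication: int) -> dict[int, list[str]]:
    """Assign each block to `replication` distinct nodes using round-robin.

    Simplified illustration only: real HDFS uses a rack-aware placement policy.
    """
    if replication > len(nodes):
        raise ValueError("replication factor cannot exceed the number of nodes")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


# Hypothetical example: a 10-byte "file", 4-byte blocks, 4 nodes, 3 replicas each.
blocks = split_into_blocks(b"0123456789", block_size=4)
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"], replication=3)
print([len(b) for b in blocks])  # [4, 4, 2]
print(placement)
# {0: ['node1', 'node2', 'node3'], 1: ['node2', 'node3', 'node4'], 2: ['node3', 'node4', 'node1']}
```

Every block ends up on three different nodes, so any single node can fail without a block becoming unavailable.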

MapReduce: MapReduce is a programming model and processing engine for parallel data processing. It’s the workhorse of Hadoop, responsible for dividing tasks across nodes and aggregating the results.

YARN (Yet Another Resource Negotiator): YARN is the resource management layer of Hadoop. It handles resource allocation and job scheduling, ensuring efficient resource utilization.
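
As a rough illustration of what "resource allocation" means here, the sketch below hands out containers (slices of memory and CPU) on worker nodes using a simple first-fit rule. The `Node` class, the `allocate_containers` function, and the capacities are hypothetical. YARN's actual schedulers (such as the Capacity Scheduler and the Fair Scheduler) take queues, priorities, and data locality into account rather than using plain first-fit.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    """A worker node and its currently free resources (hypothetical model)."""
    name: str
    free_memory_mb: int
    free_vcores: int


def allocate_containers(nodes: list[Node], requests: list[tuple[int, int]]) -> list[str | None]:
    """First-fit: place each (memory_mb, vcores) request on the first node that can hold it.

    Returns the chosen node name per request, or None if no node had room.
    """
    assignments: list[str | None] = []
    for memory_mb, vcores in requests:
        for node in nodes:
            if node.free_memory_mb >= memory_mb and node.free_vcores >= vcores:
                # Reserve the resources on this node for the new container.
                node.free_memory_mb -= memory_mb
                node.free_vcores -= vcores
                assignments.append(node.name)
                break
        else:
            # No node had enough free memory and cores for this request.
            assignments.append(None)
    return assignments


cluster = [Node("nm1", free_memory_mb=4096, free_vcores=4), Node("nm2", free_memory_mb=2048, free_vcores=2)]
print(allocate_containers(cluster, [(2048, 2), (2048, 2), (2048, 2), (1024, 1)]))
# ['nm1', 'nm1', 'nm2', None]
```

The fourth request gets `None` because both nodes are already full; in a real cluster such a request would stay pending until resources are released.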

How Does Hadoop Work?

Hadoop’s distributed architecture is designed for horizontal scalability. When a file is uploaded to HDFS, it’s broken into blocks (typically 128 MB or 256 MB each), and multiple replicas are created across the cluster for fault tolerance. MapReduce jobs are then executed across these blocks, allowing for parallel processing.
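
The block and replica arithmetic is easy to check by hand. The helper below is a hypothetical sketch that assumes the HDFS defaults of a 128 MB block size and a replication factor of 3 (both can be changed per cluster and per file). Because HDFS does not pad the last block of a file on disk, raw storage is simply the file size times the replication factor.

```python
from __future__ import annotations

MB = 1024 * 1024


def hdfs_footprint(file_size_bytes: int, block_size_bytes: int = 128 * MB, replication: int = 3) -> tuple[int, int]:
    """Return (number of blocks, raw bytes stored across the cluster) for one file."""
    # Ceiling division: a partially filled final block still counts as a block.
    num_blocks = (file_size_bytes + block_size_bytes - 1) // block_size_bytes
    # The last block is not padded, so raw storage is just size x replicas.
    raw_bytes = file_size_bytes * replication
    return num_blocks, raw_bytes


print(hdfs_footprint(1024 * MB))  # (8, 3221225472): 8 blocks, 3 GB of raw storage
print(hdfs_footprint(300 * MB))   # (3, 943718400): blocks of 128 + 128 + 44 MB, 900 MB raw
```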

Here’s a simplified overview of how Hadoop works:

Data Ingestion: Data is loaded into HDFS, where it’s split into blocks and replicated across the cluster.

Map Phase: During this phase, data is processed in parallel across nodes. Each node works on a subset of the data and applies a user-defined map function to extract and transform relevant information.

Shuffle and Sort: The framework sorts and groups the map outputs by key, preparing the data for the reduce phase.

Reduce Phase: The reduce phase takes the sorted and grouped data and applies a user-defined reduce function to produce the final output.

Data Output: The final results are stored or used as needed.
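
These five steps can be simulated in a single Python process with the classic word-count example. This is a sketch, not the Hadoop MapReduce API: each inner list in the hypothetical `splits` variable stands in for one HDFS block handled by one mapper, and the phases run one after another here, whereas Hadoop runs map and reduce tasks in parallel on different nodes.

```python
from __future__ import annotations

from itertools import groupby
from operator import itemgetter
from typing import Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """User-defined map function: emit (word, 1) for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_and_sort(pairs: list[tuple[str, int]]) -> Iterator[tuple[str, list[int]]]:
    """Sort map outputs by key, then group all values that share a key."""
    ordered = sorted(pairs, key=itemgetter(0))
    for key, group in groupby(ordered, key=itemgetter(0)):
        yield key, [value for _, value in group]


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """User-defined reduce function: add up the counts for one word."""
    return key, sum(values)


def run_job(splits: list[list[str]]) -> dict[str, int]:
    # Map: in Hadoop, each split would be processed by a separate map task in parallel.
    mapped = [pair for split in splits for line in split for pair in map_phase(line)]
    # Shuffle and sort, then reduce each key group; the dict is the "data output".
    return dict(reduce_phase(key, values) for key, values in shuffle_and_sort(mapped))


splits = [["the cat sat", "the dog"], ["a cat ran"]]  # two hypothetical input splits
print(run_job(splits))
# {'a': 1, 'cat': 2, 'dog': 1, 'ran': 1, 'sat': 1, 'the': 2}
```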

Benefits of Hadoop Architecture

The Hadoop architecture offers several key benefits:

Scalability: Hadoop scales horizontally, making it easy to accommodate growing data volumes by adding more nodes to the cluster.

Fault Tolerance: Because HDFS keeps copies of each block on several nodes and failed tasks can be rerun on other nodes, the loss of a single machine does not have to mean lost data or a failed job.

Cost-Effectiveness: Hadoop runs on commodity hardware, reducing infrastructure costs.

Flexibility: The ecosystem of tools and libraries provides flexibility for various data processing tasks.
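
To illustrate the fault-tolerance benefit, the sketch below shows what happens to replica placement when a node dies: blocks that lost a copy are topped back up using live nodes that do not already hold one. In real HDFS, the NameNode notices a dead DataNode when its heartbeats stop and schedules re-replication; the `rereplicate` function, the node names, and the simple "first eligible node" choice here are hypothetical simplifications.

```python
from __future__ import annotations


def rereplicate(
    placement: dict[int, list[str]],
    failed_node: str,
    live_nodes: list[str],
    replication: int = 3,
) -> dict[int, list[str]]:
    """Drop `failed_node` from every block's replica list and top up
    under-replicated blocks with live nodes that do not already hold a copy."""
    repaired: dict[int, list[str]] = {}
    for block_id, holders in placement.items():
        survivors = [node for node in holders if node != failed_node]
        candidates = [node for node in live_nodes if node not in survivors and node != failed_node]
        missing = max(replication - len(survivors), 0)
        # If there are not enough candidates, the block simply stays under-replicated.
        repaired[block_id] = survivors + candidates[:missing]
    return repaired


placement = {0: ["n1", "n2", "n3"], 1: ["n2", "n3", "n4"], 2: ["n1", "n3", "n4"]}
print(rereplicate(placement, failed_node="n2", live_nodes=["n1", "n3", "n4", "n5"]))
# {0: ['n1', 'n3', 'n4'], 1: ['n3', 'n4', 'n1'], 2: ['n1', 'n3', 'n4']}
```

After `n2` fails, every block is back to three replicas and none of them lives on the failed node; block 2 never had a copy there, so it is left untouched.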

Conclusion

In the world of big data, Hadoop architecture has become a vital tool for organizations looking to harness the power of their data. Its distributed nature, fault tolerance, and scalability make it a game-changer for handling vast datasets and complex processing tasks. By understanding the core components and ecosystem, businesses can make informed decisions about how to leverage Hadoop to gain actionable insights from their data.

Hadoop is not just a technology; it’s a paradigm shift that enables data-driven innovation and growth in today’s data-centric world.